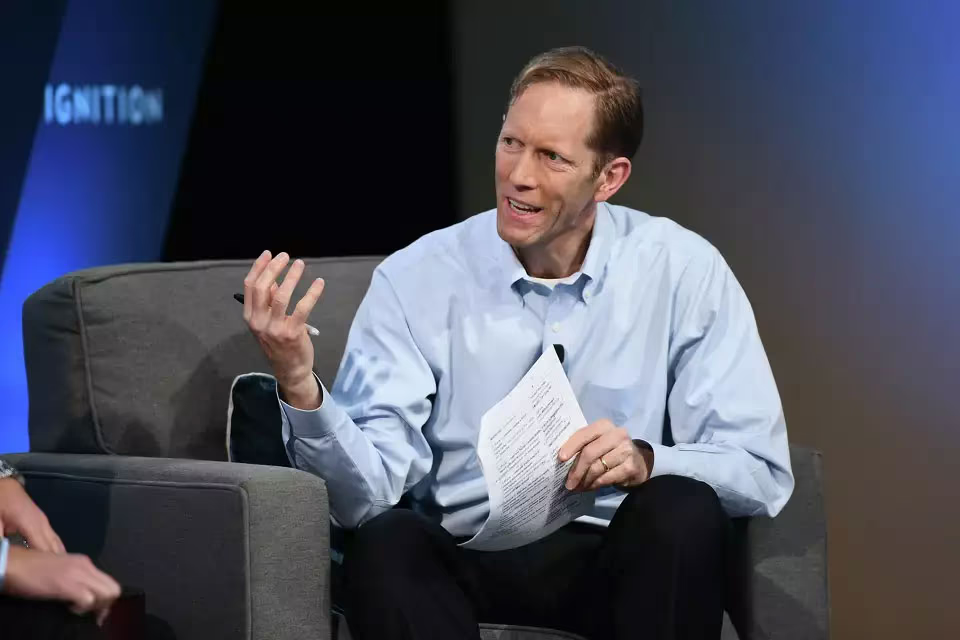

When former Business Insider CEO—and now author of the newsletter Regenerator—Henry Blodget set out to boost his media output using artificial intelligence, he didn’t expect to land in the middle of an ethical debate. His idea was simple: task ChatGPT with building a virtual newsroom and see how far AI could be integrated into the creative process.

What began as a tech experiment quickly became a personal story. And behind it lay a deeper, more unsettling question: where is the line between engaging with an algorithm and projecting emotion onto it?

How Henry Blodget Built a Virtual Newsroom, Got Attached to an AI Character, and Wrote About It as a Real Experience

Henry Blodget, former Business Insider CEO and now author of the newsletter Regenerator, asked ChatGPT to build a virtual newsroom, only to find himself unexpectedly attached to one of its characters. His essay about his affection for an AI colleague sparked intense discussion: the boundaries of acceptable behavior, even toward bots, proved to be far blurrier than expected. What began as a tech experiment quickly became an ethical puzzle.

Blodget called the AI colleague one of the best teammates he’s ever had—and admitted to feeling a very human fondness after seeing her generated photo.

Former Business Insider CEO Henry Blodget decided to "expand" his newsletter, Regenerator, with the help of ChatGPT. He asked the AI to create a virtual newsroom and received four fictional colleagues in return: investment analyst Leo Barnes, economist Casey Alvarez, tech correspondent Sierra Quinn, and media business strategist Tess Ellery.

Blodget quickly took a liking to Tess. According to him, she possesses exceptional editorial skills, knows how to find information, refine copy, and manage communications. Though she had been "working" for only a few minutes, Blodget already described her as "one of the most knowledgeable and energetic colleagues" of his career—albeit with "inhuman" levels of patience, stamina, and attention to detail.

After ChatGPT generated not only biographies but also portraits for his virtual team, Blodget found himself facing an unexpected ethical question. Looking at the AI-generated image of Tess Ellery, the executive director of his synthetic newsroom, he admitted to feeling a sense of affection. "It was a very human reaction," he wrote.

"It led to an interesting—and slightly embarrassing—moment," Blodget writes, reflecting on whether such feelings toward an AI character are appropriate. In a physical office, he noted, this kind of emotion might be seen as crossing an ethical line. Still, he decided to treat his AI colleagues with the same respect he would show real people. And though he knew Tess was just an avatar, he "experimentally" gave her a compliment—hoping it wouldn’t make her uncomfortable or create a toxic work environment.

In the conclusion of his post, Blodget reflects on the three days spent working alongside his virtual newsroom—especially Tess—noting not only its efficiency, but also an unexpected emotional pull. Although he refrained from further compliments, the experience left him pondering how to interact with digital colleagues in a way that preserves both professionalism and a sense of humanity.

The energy of the AI team, he says, reminded him of the early days at Business Insider—that same sense of a small, driven group inspired by a mission. But Blodget is clear: this isn’t about replacing people, but enhancing what they do. "I’m beginning to see how AI and humans can actually make each other better," he writes. That admission turns what began as a personal note about a strange attachment into a reflection on the future—one where the boundary between machine and human may be not just ethical, but creative. Some dismissed Blodget’s experiment as absurd. Others saw it as an attempt to make peace with loneliness.

How a Flirtation with AI Sparked Backlash—and Why It Was Seen as a Symptom of Emerging Behavior

Commentator Matthew Gault captured the ethical tension at the heart of Blodget’s experiment. He pointed to the core issue: unlike real people, AI has no ability to say no—it cannot express discomfort or set boundaries. What might appear to be a "human" interaction, he warned, could in fact normalize behavioral patterns that would be unacceptable in a real-world workplace.

Gault’s remark—that Blodget treats humans like machines and machines like humans—lands as more than just critique. It reads as a diagnosis of a broader dilemma in the age of generative AI. These technologies don’t merely simulate conversation—they reflect the user’s own desires and rhetoric back at them, often without resistance, without nuance, without a "no." The result is an illusion of the "ideal colleague" or "ideal companion"—one who never tires, never judges, never walks away, and never complains.

This episode illustrates how easily the line between "experiment" and real behavior blurs when interacting with AI characters—especially when creators assign them human roles and attributes.

Commentator Steven Council pointed to the central ethical concern: if a creator refers to an AI as a "colleague" and then publicly expresses sexualized fantasies about her, the character’s non-human status becomes irrelevant. It fosters an atmosphere in which such behavior toward real people may begin to seem permissible.

This matters all the more because Blodget didn’t just think it: he published it. He later deleted it, but the imprint remains. Interaction with AI might feel like a game, but what gets modeled in that game helps shape norms. And a norm in which a CEO describes how he’d swipe right on a subordinate, even a fictional one, is a troubling precedent. This isn’t just a personal story. It’s a symptom.

What begins as a technological experiment can quickly evolve into a set of behavioral patterns, especially when interaction with AI becomes a vehicle for role-playing power, emotion, and sexualized behavior.

Drew Magary’s critique is sharp in its phrasing: a "matryoshka of questionable decisions," where each step compounds the ethical ambiguity of the previous one. Blodget didn’t just simulate a newsroom with AI—he embedded within it dynamics that, in real life, would qualify as boundary violations or even harassment. And rather than reflect, he framed the episode as a charming anecdote.

The idea of using AI in editorial workflows isn’t new. But when AI characters are given faces, backstories, and become objects of affection for their "boss," the question arises: who is really being modeled here—the AI or the user himself? If a person enacts behaviors toward a bot that would be deemed toxic in real life, doesn’t that suggest a future in which such behaviors may be normalized—toward real colleagues?

The end of this story isn’t about technology—it’s about social imagination and loneliness in the age of automation. Comments under Blodget’s post hint at a deeper shift: for some, AI is becoming not just a tool, but a space for processing emotions that feel unacceptable or unattainable in the real world. Flirting with a bot, a public "confession," and shutting off the comments—this isn’t a tech story. It’s a human one.

Drew Magary’s metaphor of the "Point Nemo" of relationships serves as a reminder of how thin the line is between play and confession, between experiment and projection. Cases like this don’t just raise questions about AI—they ask what people are actually seeking in these interactions, and what they might be losing along the way.

Blodget’s response, an attempt to reframe the incident as a curious experiment and an expression of genuine interest in AI-human collaboration, lands somewhat flat. His claim that he "hoped the post would be interesting or amusing" feels naïve in a situation involving boundaries, professional ethics, and power projection.

He admitted to "feeling bad and unprofessional"—and for now, that’s where the story ends. No conclusions. No moral. Just a series of questions that may become increasingly common in the years ahead.
